(I hope the OP is still interested in what's going on.)


So,

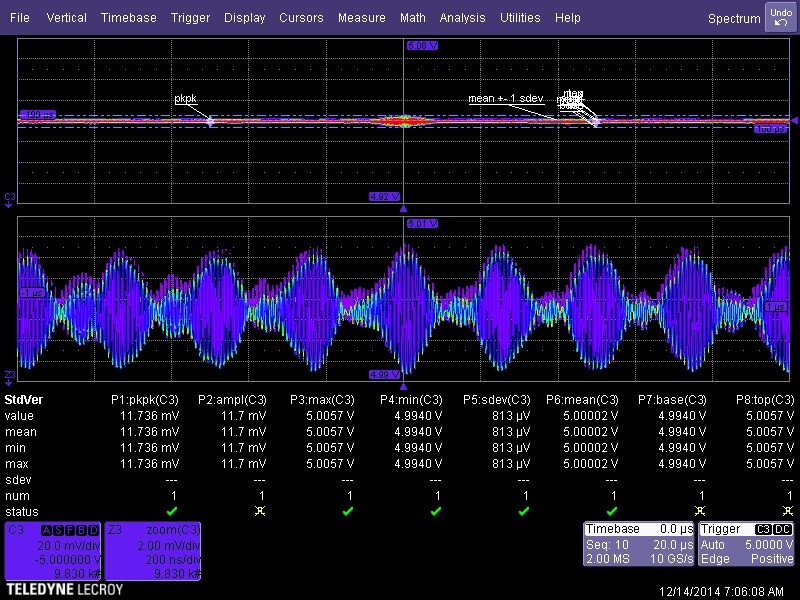

You have shown the noise level (sDEV) and the zero-offset DC value; both look low and accurate, respectively. However, look at the scope's stated offset accuracy (as incomplete as it may be, that's all I have to go on with regard to theoretical/worst-case accuracy limits):

"Offset Accuracy: Fixed gain setting < 2 V/div: ±(1.5% of offset value + 0.5% of full scale value + 1 mV)"

There is a (large) error component related to the offset value, so in your example it would be 1.5% of 5 V, or about 75 mV. Obviously your scope performs much better than that, given its correlation with the DMMs, but if you want to validate how good it really is, you need to do so at that operating point, which, as you mention, is a different and difficult measurement problem.
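To put numbers on that spec line, here is a minimal Python sketch of the worst-case bound. It assumes "full scale" means V/div times 8 vertical divisions, which is my assumption and not something the quoted spec states; the 10 mV/div setting is just an example.

```python
def offset_error_bound(offset_v: float, volts_per_div: float, divisions: int = 8) -> float:
    """Worst-case offset error in volts from the quoted spec line:
    +/-(1.5% of offset value + 0.5% of full scale value + 1 mV).

    ASSUMPTION: "full scale" is volts_per_div * divisions, with 8 vertical
    divisions as the default; check the datasheet's actual definition.
    """
    if volts_per_div >= 2.0:
        # The quoted line only covers fixed gain settings below 2 V/div.
        raise ValueError("quoted spec line covers < 2 V/div only")
    full_scale = volts_per_div * divisions
    return 0.015 * abs(offset_v) + 0.005 * full_scale + 0.001


# 5 V offset at a hypothetical 10 mV/div setting: the offset term dominates.
bound = offset_error_bound(5.0, 0.010)
print(f"worst case: +/-{bound * 1e3:.1f} mV")  # prints: worst case: +/-76.4 mV
```

The 1.5% term contributes 75 mV of the total, which is why validating the scope at the 5 V operating point matters far more than checking it at zero offset.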

Because of the above, I am still having a hard time accepting that the scope's MEAN value is a more accurate "DC only" value than an (accurate) DMM's, but that is my problem.

I am not in any way challenging the fact that large bandwidth is required for such analysis (I am far from knowledgeable in this field, but from my little experience, high-frequency artifacts from DC-DC converters are a problem, and high bandwidth, as you mention, is required to understand and solve them); it is just the DC accuracy that I am struggling with. In the past we have done similar measurements using AC coupling (to minimize scope errors) to see all the non-DC energy, and used an accurate DMM to determine the "MEAN" value. Maybe that was a mistake on our part, although I am not yet convinced... but then again, my problem.
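For reference, a minimal sketch of that AC-coupled approach, assuming you have the AC-coupled scope record as a list of samples plus a single DMM DC reading (all numbers below are made up). The scope record's own residual mean is discarded so that the DMM alone sets the DC level.

```python
from statistics import fmean


def combine_dc_and_ac(dmm_dc_v, ac_samples_v):
    """Estimate the full waveform from a DMM DC reading and an AC-coupled scope record.

    Any residual DC left in the AC-coupled record (coupling, scope offset) is
    removed first, so the mean of the result is exactly the DMM reading.
    """
    ac_mean = fmean(ac_samples_v)
    return [dmm_dc_v + (s - ac_mean) for s in ac_samples_v]


ac = [0.012, -0.008, 0.015, -0.011, 0.002, -0.010]  # hypothetical ripple samples, volts
full = combine_dc_and_ac(5.0021, ac)                  # hypothetical DMM reading, volts
print(f"min {min(full):.4f} V, max {max(full):.4f} V, mean {fmean(full):.4f} V")
```

The DC accuracy then rests entirely on the DMM, while the scope only has to resolve the shape and amplitude of the ripple.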

Thanks,

Ran

I still have to trust what my bench results say, as they are a reference. So let's say I was out by X, and my device under test exhibited an acceptable sDEV from "spec". Then I have to accept that if those results beat a prediction, they offer greater accuracy than the prediction/simulation. I would trade accuracy for resolution any day, as long as the resolution keeps my device at optimal operating specifications. I would trust those results far above what any manufacturer's datasheet tells me, unless there was some completely wild discrepancy... and if that happened, the chances of a large cross section of production devices working well would seem low (yield).

Keep in mind that the DMM may represent DC accuracy, with a steeper filter slope/isolation from AC... BUT the AC is, in reality, there. If the AC is low enough in frequency for the DMM to capture it and add it to the DC (many DMMs have an AC+DC measurement for this reason), then you more fully characterize the total power quality of the device under test. That is a quantitative rule in formulating "power quality": it's always the sum of the total components at the point of load.
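The "sum of the total components" idea has a simple form for RMS: because the AC part has zero mean, total RMS = sqrt(DC^2 + AC_rms^2), which is what a true-RMS AC+DC mode reports within the meter's bandwidth. A short Python check against a sampled waveform (the 5 V plus 50 mV-peak sine is hypothetical):

```python
import math


def ac_plus_dc_rms(dc_v, ac_rms_v):
    """Total RMS of a DC level with AC superimposed: sqrt(DC^2 + AC_rms^2).
    Valid because the AC component has zero mean, so the cross term averages out."""
    return math.hypot(dc_v, ac_rms_v)


def rms(samples):
    """Plain RMS of a sample record."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


# One full period of a hypothetical 5 V + 50 mV-peak sine, 1000 samples.
n = 1000
wave = [5.0 + 0.05 * math.sin(2 * math.pi * k / n) for k in range(n)]
ac_rms = 0.05 / math.sqrt(2)
print(rms(wave), ac_plus_dc_rms(5.0, ac_rms))  # the two values agree
```

Note how little the ripple moves the total here (about 125 µV on 5 V), even though it can matter a great deal at the point of load.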

Also, a DMM reading is a mean value across the integration time, minus the total counts equation. Obviously, the higher the total polling rate, the more accuracy you will have; and the longer the total integration time, the more you lose accuracy but GAIN resolution. This is why I like to have two DMMs that can both do trend/min/max/avg readings. I can set each to a different integration time (one always shorter than the other) and try to reach a higher degree of accuracy by margining BOTH results over time.
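A rough sketch of the two-DMM trend idea: each meter's readings feed a running min/max/average/std-dev tracker (Welford's algorithm, my choice for the sketch, not necessarily what the meters do internally), and the two long-run averages are compared. `Trend` and `margin` are hypothetical names, and the readings are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Trend:
    """Running min/max/average/std-dev, similar to a DMM's trend/stats mode."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0          # sum of squared deviations (Welford)
    lo: float = math.inf
    hi: float = -math.inf

    def add(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        self.lo = min(self.lo, x)
        self.hi = max(self.hi, x)

    @property
    def stdev(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def margin(fast: Trend, slow: Trend) -> float:
    """Difference of the two long-run averages; small means the meters agree."""
    return fast.mean - slow.mean


fast, slow = Trend(), Trend()
for v in [5.0012, 5.0019, 5.0008, 5.0015, 5.0011]:  # hypothetical short-integration readings
    fast.add(v)
for v in [5.0013, 5.0014]:                          # hypothetical long-integration readings
    slow.add(v)
print(f"fast {fast.mean:.5f} (sd {fast.stdev:.5f}), slow {slow.mean:.5f}, "
      f"diff {margin(fast, slow) * 1e6:.0f} uV")
```

The shorter-integration meter produces more readings and a visible spread; the longer one produces fewer, smoother readings. Watching whether the two averages converge over time is the "margining" step.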

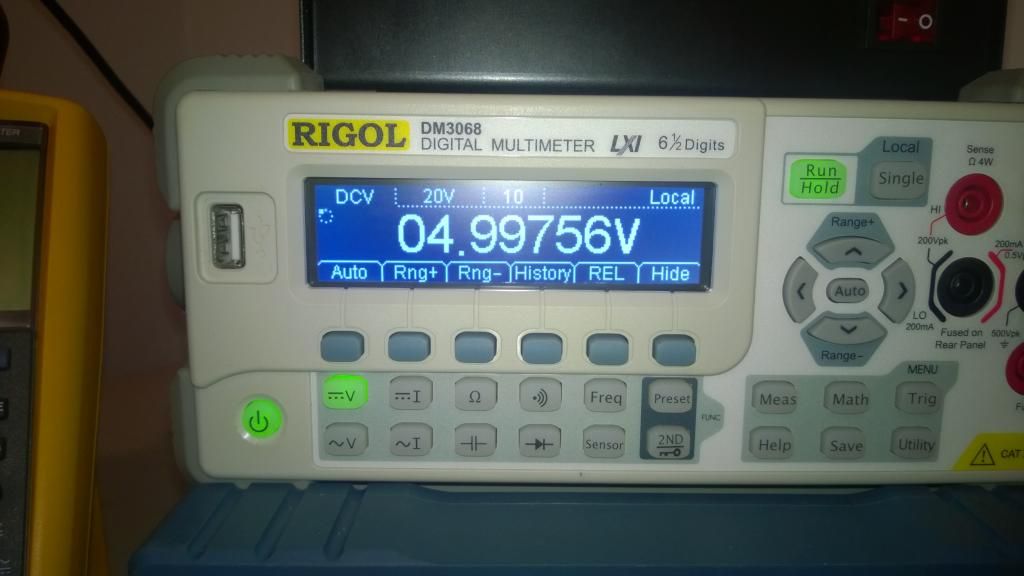

Here is another screengrab, with the same interpolation pre-processing on the signal. HOWEVER, this time I calibrate the scope against the reference, so now the scope IS the reference. Do you see my point now about calibrating at least ONE device in the T&M chain to an accurate reference? Remember, my REF is the LEAST accurate device in this signal chain. That is often the case with a consumer good that is being tested. So let's make a scenario here...

I calibrate ALL devices in the test system against a known stable reference. I then measure the total offset across the entire system, and use that sDEV as a re-calibration point for the measurement output. It's a vastly more accurate way of obtaining a larger cross section of results that meet "spec". The other way will have a larger statistical deviation from one point of known reference. This way I maintain traceability across each piece of the test system plus each device tested, with a realistic sDEV across the total "yield". Obvious failures will be more obvious. Devices that exceed "spec" can be pulled and analyzed to gain insight into WHY they are "better", and that can possibly lead to implementing those discoveries to yield a higher standard. Traceability is king, above any manufacturer's spec book.
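One way to sketch that scenario in Python, under stated assumptions: every instrument in the chain reads the same known-stable reference, the mean offset is subtracted from each DUT reading, and the chain's sDEV is used as a guard band around the spec limits. The guard-band classification and its k = 2 multiplier are a standard metrology technique swapped in for illustration, not something from this thread; all numbers are hypothetical.

```python
from statistics import fmean, stdev


def chain_offset(readings_of_ref, ref_v):
    """Offset of each instrument vs a known-stable reference, and the spread (sDEV) across the chain."""
    offsets = [r - ref_v for r in readings_of_ref]
    return fmean(offsets), stdev(offsets)


def classify(dut_readings, mean_offset, sdev, nominal, tol, k=2.0):
    """Correct each DUT reading by the chain offset, then apply a k*sDEV guard band.

    'pass'     : inside nominal +/- tol even allowing for the chain's spread
    'fail'     : outside it even allowing for the spread
    'marginal' : too close to the limit to call with this measurement chain
    k = 2 is an ASSUMPTION for the sketch.
    """
    guard = k * sdev
    result = {}
    for name, raw in dut_readings.items():
        dev = abs((raw - mean_offset) - nominal)
        if dev <= tol - guard:
            result[name] = "pass"
        elif dev > tol + guard:
            result[name] = "fail"
        else:
            result[name] = "marginal"
    return result


# Hypothetical: four instruments reading a 5.000 V reference, three DUTs, +/-50 mV spec.
mean_off, sdev = chain_offset([5.0012, 4.9995, 5.0008, 5.0003], 5.000)
res = classify({"A": 5.0104, "B": 4.9480, "C": 5.0495}, mean_off, sdev, nominal=5.0, tol=0.050)
print(res)
```

The tighter the chain's sDEV, the narrower the "marginal" zone, which is the practical payoff of calibrating the whole system against one reference.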

NOW, if the min/max sDEV were supplied for each unique piece of test gear, and all items could be verified on site (at the test), then I would be MUCH more inclined to believe those statements OVER my measurements, especially if the test gear used to verify the system was provably "more traceable" than the system. This is why a maintained set of reference standards is important. At the end of the day, you HAVE to have your own internal references, as daily or weekly calibrations against external standards can kill you in downtime and buy-in (if one set of gear is always out for cal, you need two sets...).

The most accurate reading available is not from the DMMs or the scopes (notice the plurality there). The most accurate reading is the mean of the sDEV average of everything in the test chain, plus/minus the results from the hardware under test.
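If you want to formalize "everything in the test chain", one standard option (swapped in here, not the exact method described above) is an inverse-variance weighted mean, where each instrument's reading is weighted by 1/u^2 of its standard uncertainty. A minimal sketch with made-up readings; it assumes independent, unbiased errors, so a drifted meter will still pull the result, which is exactly why traceability to a reference matters.

```python
import math


def inverse_variance_mean(readings):
    """Combine (value, standard_uncertainty) pairs from independent instruments.

    Returns (weighted_mean, standard_uncertainty_of_mean). ASSUMES independent,
    unbiased errors: a systematic offset in one meter is NOT removed by this.
    """
    weights = [1.0 / (u * u) for _, u in readings]
    total = sum(weights)
    mean = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return mean, math.sqrt(1.0 / total)


# Hypothetical (value, uncertainty) pairs in volts: scope mean, DMM 1, DMM 2.
mean, u = inverse_variance_mean([(5.0115, 0.0030), (5.0021, 0.0005), (5.0019, 0.0010)])
print(f"{mean:.5f} V +/- {u * 1e3:.2f} mV")
```

With equal uncertainties this reduces to the plain mean; unequal uncertainties let the better-characterized instruments dominate.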

I hope that explains why I would say I "trust" the mean result on my scope more than the mean result on my DMM. The scope is verified as being far more accurate than its datasheet says. The DMM, however, has not been characterized in the same way. I am not sure my point about accuracy is being understood... or it's quite possible that I have articulated it poorly. The accuracy of my scope is higher, in relation to total power quality, than the DCV reading on my DM3068. The specs for the DM3068 are even more "incomplete" than those for the MXi. Is the scope's DC accuracy "better" than the DM3068's? That is not provable without some more investigation into the DM3068. However, the ref being used says that if I calibrate against my scope reading, I get a more real-world representation of the total power quality at the point of load.

I also hope the OP is still around, as these tests all mirror the exact scenario his device will run into... but I also think some other people might gain some insight from this discussion. It's quite an examination of the core fundamentals of oscilloscope usefulness. I think I have also shown some tests that others can do to see just where their T&M sDEV curve stands. I view that as quite important and a good investment of time and effort.

BTW, the measurement discrepancy between the scope and the DMM is 0.009276 V (DC to ~5 MHz). Oddly enough, that is virtually identical to the discrepancy between the sDEV of the Fluke 289 and the sDEV of the DM3068. Too close to be a coincidence. I think the DM3068 needs to be checked against a standard, and I need to send out my 5 V lab standard to be checked. If the 3068 has drifted that much since cal, something isn't right. I did recently move it and change the environmental control average.
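One standard way to decide whether a discrepancy like 9.276 mV is a real disagreement rather than noise is the normalized error E_n used in interlaboratory comparisons: E_n = (x1 - x2) / sqrt(U1^2 + U2^2), with |E_n| <= 1 counted as agreement. A minimal sketch; the expanded uncertainties below are hypothetical placeholders, not the actual scope or DM3068 specs.

```python
import math


def normalized_error(x1, U1, x2, U2):
    """E_n = (x1 - x2) / sqrt(U1^2 + U2^2), with U1, U2 expanded (k = 2) uncertainties.
    |E_n| <= 1: results agree within their uncertainties; > 1: investigate."""
    return (x1 - x2) / math.hypot(U1, U2)


# Only the difference matters, so compare the 9.276 mV discrepancy against zero.
# HYPOTHETICAL uncertainties: 5 mV for the scope mean, 0.5 mV for the DMM.
en = normalized_error(0.009276, 0.005, 0.0, 0.0005)
print(f"E_n = {en:.2f} -> {'agree' if abs(en) <= 1 else 'disagree: check against a standard'}")
```

Plugging in the real, traceable uncertainties for each meter (once the DM3068 and the 5 V standard come back from cal) turns "too close to be coincidence" into a pass/fail call.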