...

wouldn't have said it better.

Speaking of PICs, some of the proposed improvements are already part of the XC compilers. Others are requests that clearly come from assembly programmers who can't stop thinking in assembly and ostensibly write in what I call "Clever C" (forgetting that you CAN, and sometimes must, use separate assembly modules. Just conform to the ABI and call them as C functions so the compiler is happy; other programmers will be as well).

Dedicated strings require heap, and personally speaking, in a C project I prefer to avoid the heap. In languages in which it is a given, I simply apply another mindset (using it responsibly, of course).
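To make the "strings without heap" point concrete, here is a minimal sketch of a fixed-capacity string in C. The names `fstr`, `fstr_init`, `fstr_append` and the capacity `FSTR_CAP` are hypothetical, invented for illustration; the storage lives inside the struct, so an instance can be static or on the stack, and appends that do not fit are truncated rather than reallocated.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical fixed-capacity string: the buffer is part of the struct,
   so no malloc is ever involved. FSTR_CAP is an arbitrary choice. */
#define FSTR_CAP 32

typedef struct {
    size_t len;                 /* number of bytes in use, excluding NUL */
    char   data[FSTR_CAP + 1];  /* +1 for the terminating NUL */
} fstr;

/* Resets the string to empty. */
static void fstr_init(fstr *s) {
    s->len = 0;
    s->data[0] = '\0';
}

/* Appends as much of src as fits; returns the number of bytes copied. */
static size_t fstr_append(fstr *s, const char *src) {
    size_t room = FSTR_CAP - s->len;
    size_t n = strlen(src);
    if (n > room) {
        n = room;               /* truncate instead of growing */
    }
    memcpy(s->data + s->len, src, n);
    s->len += n;
    s->data[s->len] = '\0';
    return n;
}
```

The trade-off is the usual one: memory use is known at build time, but the caller has to decide what truncation means for the application.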

I've only mentioned the kinds of things raised by very experienced hardware/software engineers who rely heavily on PIC. Their needs, concerns and frustrations are real, not imagined. I am simply collating broad issues and concerns raised by people who find C restrictive or inflexible.

I do hope you aren't planning a language that is specific to current PIC processors and current PIC peripherals.

If that isn't the case, what will your strategy be for inclusion/exclusion of a language feature? Either a small language, all of which is useful with all processors/peripherals, or a large language that contains all possibilities, only some of which are exploitable with a given processor/peripheral?

Without being able to articulate your strategy (to an audience including yourself), you will flail around in different directions.

These are good points. No, there is no special focus on PIC products; I just wanted to include some of the problems experienced in that domain in the overall picture. If there were some very obscure thing, specific to some very narrow device, then there's little to be gained from considering it unless the effort involved is low.

As for inclusion/exclusion strategies I think that's a fascinating question and I've not thought about it in any real detail yet.

It would include some kind of classification of language features, I suppose. One could start listing features (whatever these get defined as) and then tabulating their utility across a range of devices.

In fact this is a very good question, and I might take a stab at starting such a table, with a view to having people more expert than I contribute to it and comment on it.

I suppose we'd even need to define an MCU for the purpose of creating this tabulation.

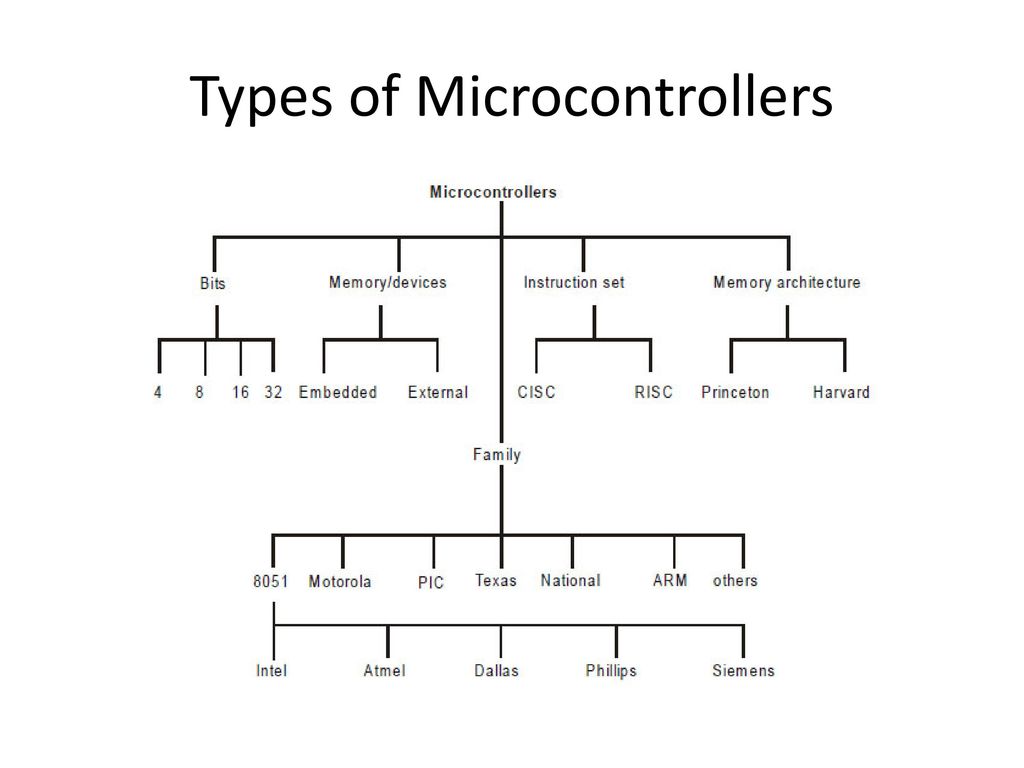

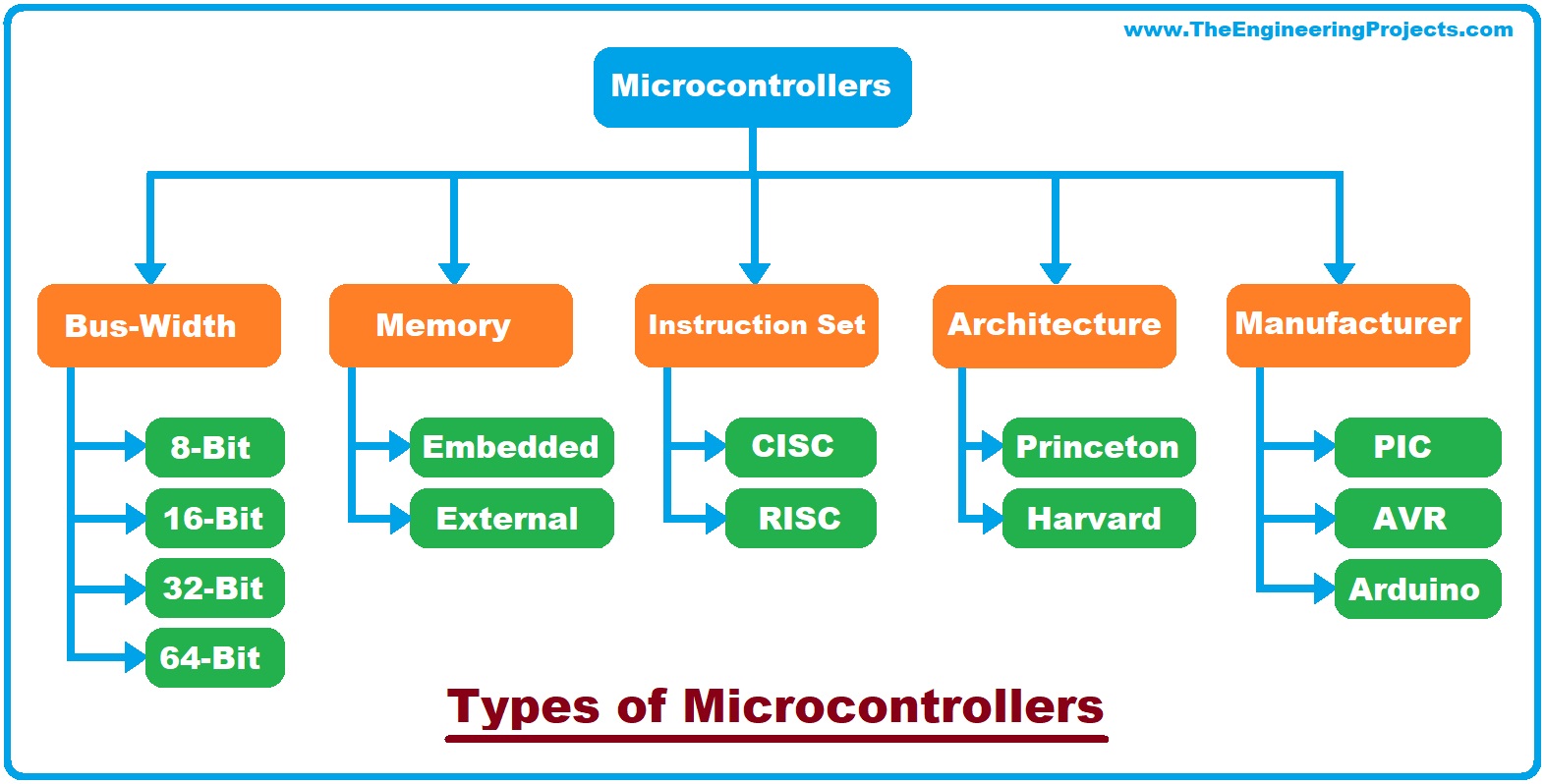

At a first pass, these things might be inputs to how we do this:

and

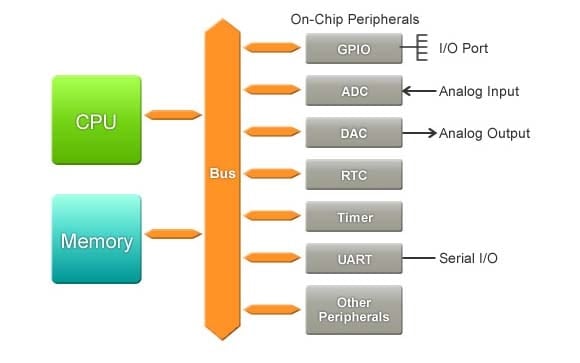

Of course there are the peripherals to consider too.

Whether the language could or should abstract some aspects of some peripherals is an open question for me. ADCs are ADCs, but there are sometimes fiddly idiosyncrasies specific to some that are not seen in others; this is where my own knowledge is rather weak.

The bus width is a trait familiar to any language designer, so we expose numeric types that reflect that to some extent.
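As a rough illustration of how an existing language already does this, C's `<stdint.h>` offers both exact-width types and "fast" types that the implementation may widen to suit the target's native word. The sketch below is only an example of that idea; the names `sample8_t`, `acc_t` and `sum_samples` are made up for it.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Exact-width type: always 8 bits, whatever the CPU's native word is. */
typedef uint8_t sample8_t;

/* "Fast" type: at least 16 bits, but the implementation may choose a
   wider type if that suits the target better. */
typedef uint_fast16_t acc_t;

/* Sums 8-bit samples; the result is reduced modulo 65536 by the cast. */
static uint16_t sum_samples(const sample8_t *buf, size_t n) {
    acc_t acc = 0;
    for (size_t i = 0; i < n; i++) {
        acc += buf[i];
    }
    return (uint16_t)acc;
}
```

On an 8-bit part `acc_t` would typically be 16 bits, while on a 32-bit or 64-bit part it may well be wider; the source stays the same either way.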

Interrupts are a clear thing to consider too, as are exceptions and memory allocation. There are several ways to manipulate memory, but most languages limit this to the concepts of static, stack and heap and offer limited ways to interact with these; perhaps this is something that could be incorporated.
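For reference, this is roughly what the three conventional storage classes look like in C. It is a small sketch with made-up names (`static_buf`, `fill_all`), meant only to show how little control the language gives beyond choosing one of the three.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Static storage: reserved for the whole lifetime of the program. */
static unsigned char static_buf[16];

/* Fills one buffer of each kind with value and returns the sum of one
   byte from each, or -1 if the heap allocation fails. */
static int fill_all(unsigned char value) {
    unsigned char stack_buf[16];            /* automatic (stack) storage */
    unsigned char *heap_buf = malloc(16);   /* heap storage, may fail */
    if (heap_buf == NULL) {
        return -1;
    }
    memset(static_buf, value, sizeof static_buf);
    memset(stack_buf, value, sizeof stack_buf);
    memset(heap_buf, value, 16);
    int sum = static_buf[0] + stack_buf[15] + heap_buf[7];
    free(heap_buf);                         /* heap must be released by hand */
    return sum;
}
```

Nothing here says where in the address space each buffer ends up; on an MCU that placement usually comes from linker scripts or compiler-specific extensions rather than from the language itself.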

Anyway, you do raise some important questions; clearly there is more to be done in this regard.